Hi,

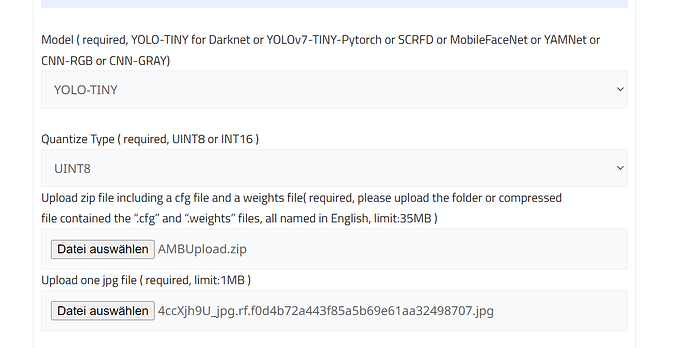

I’m trying to convert a trained YOLOv7-tiny model (Darknet) to an NB file, but the conversion fails with this error:

Error: Using your cfg and weights files can not export binary file, you can see more details on the attached zip file.

application.zip (26,7 KB)

Some parts of the export log:

2024-05-26 00:49:40.484591: W tensorflow/stream_executor/platform/default/dso_loader.cc:59] Could not load dynamic library 'libcudart.so.10.1'; dlerror: libcudart.so.10.1: cannot open shared object file: No such file or directory

2024-05-26 00:49:43.791105: W tensorflow/stream_executor/cuda/cuda_driver.cc:312] failed call to cuInit: UNKNOWN ERROR (303)

2024-05-26 00:49:43.791130: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:156] kernel driver does not appear to be running on this host (5fec6c973be1): /proc/driver/nvidia/version does not exist

Could you please check the attached zip/log that came with the email from your service and let me know what the problem is?

I also tried to convert a model that I used in December. It converted fine back then, but now I’m getting the same error with it as well, so something must be wrong with your conversion service.

Thanks for your help